MADMAX

The DLR Morocco-Acquired Dataset of Mars-Analogue eXploration

from the MOROCCO 2018 Field Test

Welcome to our website corresponding to the MADMAX data set presented in the Journal of Field Robotics, 2021.

The data set consists of 36 different navigation experiments, captured at eight Mars analog sites of widely varying environmental conditions. Its longest trajectory covers 1.5 km and the combined trajectory length is 9.2 km.

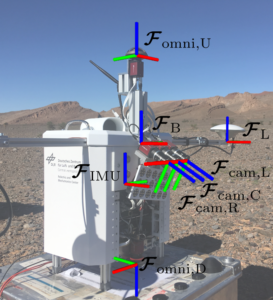

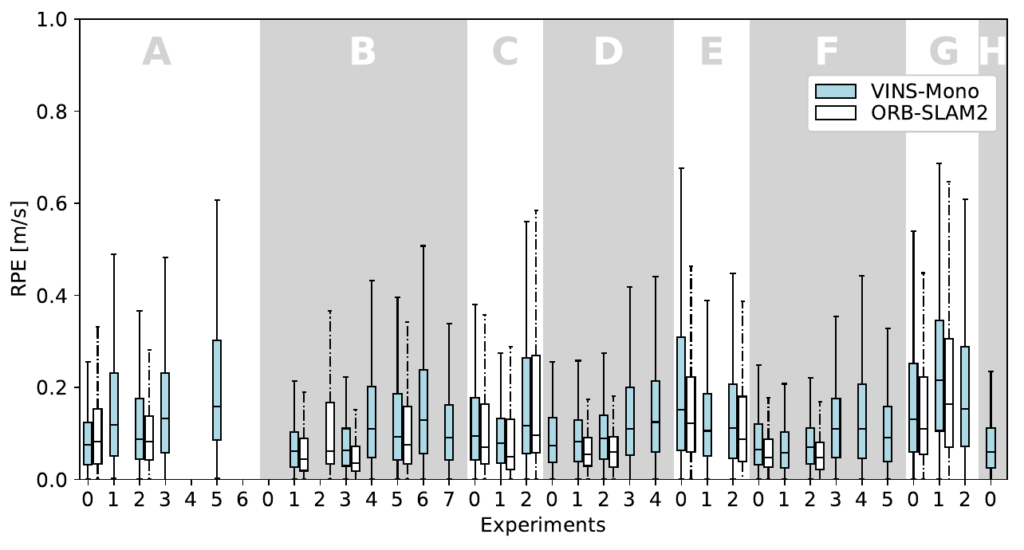

It contains time-stamped recordings from monochrome stereo cameras, a color camera, omnidirectional cameras in stereo configuration, recordings of an IMU, and a 5 DoF Differential-GNSS ground truth.

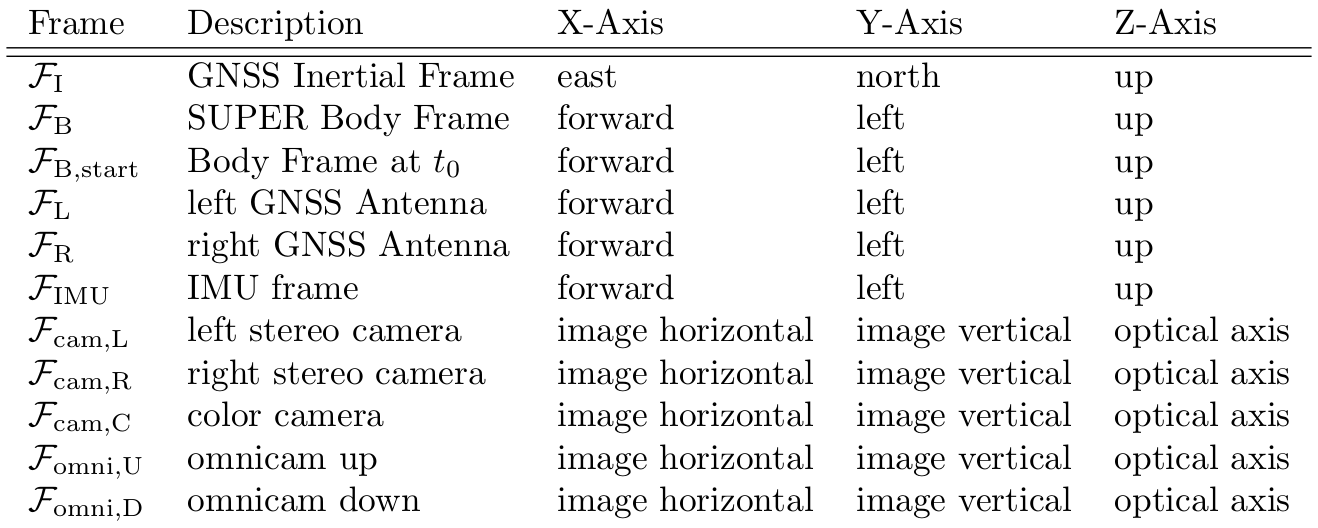

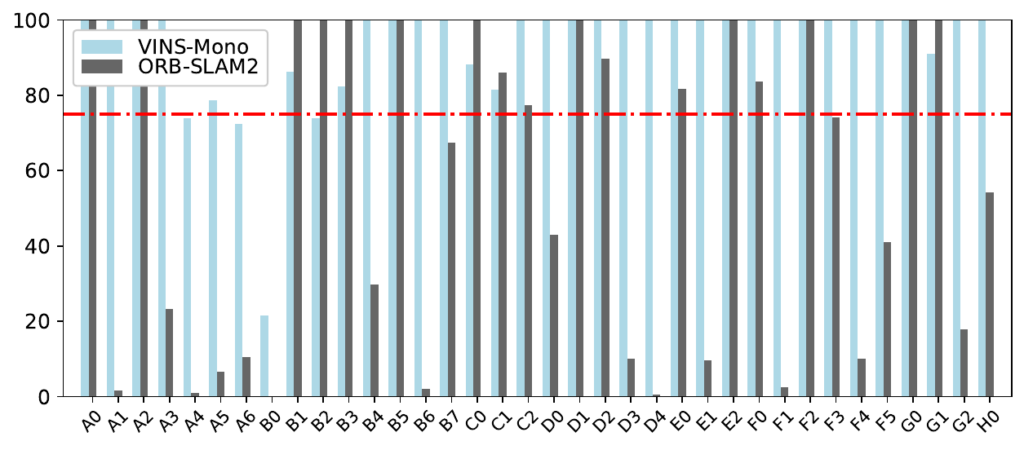

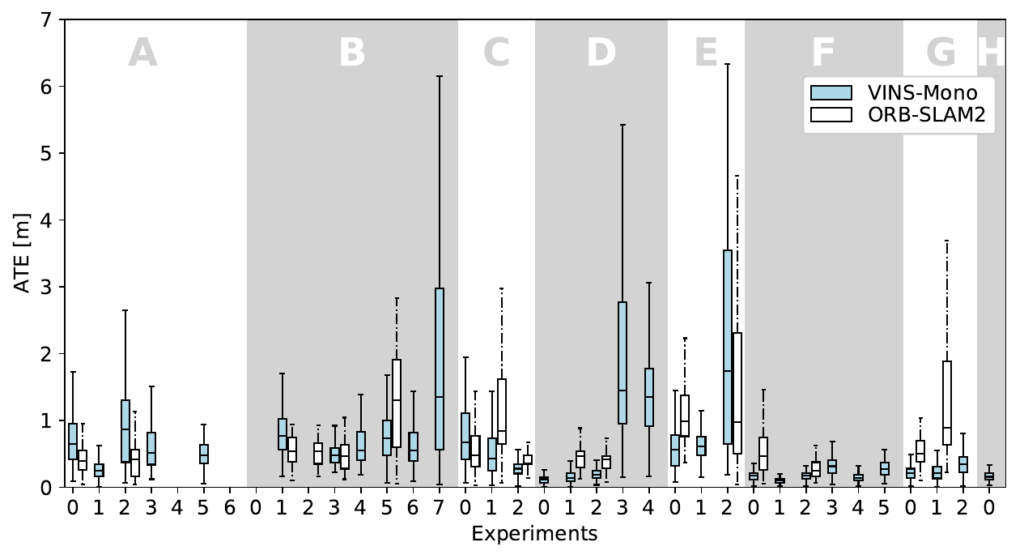

We ran two state-of-the-art navigation algorithms, ORB-SLAM2 and VINS-Mono, on our data to evaluate their accuracy. The results provide a baseline against which the accuracy and robustness of other navigation algorithms can be benchmarked.

Corresponding Publication – How to cite us:

BibTeX:

@article{https://doi.org/10.1002/rob.22016,
author = {Meyer, Lukas and Smíšek, Michal and Fontan Villacampa, Alejandro and Oliva Maza, Laura and Medina, Daniel and Schuster, Martin J. and Steidle, Florian and Vayugundla, Mallikarjuna and Müller, Marcus G. and Rebele, Bernhard and Wedler, Armin and Triebel, Rudolph},
title = {The MADMAX data set for visual-inertial rover navigation on Mars},
journal = {Journal of Field Robotics},
volume = {n/a},
number = {n/a},
pages = {},
keywords = {exploration, extreme environments, navigation, planetary robotics, SLAM},
doi = {https://doi.org/10.1002/rob.22016},
url = {https://onlinelibrary.wiley.com/doi/abs/10.1002/rob.22016},
}

Data-Recording Locations

All available data were recorded at locations A to H, which belong to the following sites:

- Rissani 1 (N 31.2983, W 4.3871) –> A,B,C

- Kess Kess (N 31.3712, W 4.0518) –> D,E

- Maadid (N 31.5005, W 4.2202) –> F,G,H

Location Overview

Trajectories of the experiments, together with the base station locations, are shown in OpenStreetMap:

Click to enlarge. You will be redirected to OpenStreetMap.

Copyright: OpenStreetMap

Please note that all presented data can be downloaded free of charge to be used in an academic environment.

However, registration is required to access the full list of download links.

Sensor Setup

| Sensor Type | Name | Specifications |
|---|---|---|
| Navigation Cameras | AlliedVision Mako G-319 | 4 Hz, monochrome 1032 × 772 px images, rectified |
| Color Camera | AlliedVision Mako G-319 | 4 Hz, color 2064 × 1544 px images, rectified |
| Camera Lenses | RICOH FL-HC0614-2M | 6 mm, F/1.4 |
| Omnicam Cameras | AlliedVision Mako G-319 | 4-8 Hz, monochrome 2064 × 1544 px images |
| Omnicam Lens | Entaniya 280 Fisheye | 1.07 mm, F/2.8, 280° × 360° field of view |
| IMU | XSENS MTi-10 IMU | MEMS-IMU, 100 Hz, three-axis acceleration and three-axis angular velocities |
| GNSS receiver | Piksi Multi GNSS SwiftNav | 1 Hz, GNSS data |
| GNSS antenna | SwiftNav GPS500 | Frequencies: GPS L1/L2, GLONASS L1/L2 and BeiDou B1/B2/B3 |

Dataset Structure

All data are grouped by location X and run N. The data are provided in human-readable formats, as text or image files.

Each Run X-N has the following data:

.
├── calibration.zip
│   │   # Calibration info on original images
│   ├── callab_camera_calibration_stereo.txt
│   ├── callab_camera_calibration_color.txt
│   │
│   │   # Calibration info on rectified images
│   ├── camera_rect_left_info.txt
│   ├── camera_rect_right_info.txt
│   ├── camera_rect_color_info.txt
│   │
│   │   # kinematic information – transformations
│   ├── tf__T_to_B_init_pose.csv
│   ├── tf__IMU_to_camera_left.csv
│   ├── tf__IMU_to_camera_color.csv
│   ├── tf__IMU_to_camera_omni_up.csv
│   ├── tf__IMU_to_camera_omni_down.csv
│   └── tf__IMU_to_B.csv
│
├── ground_truth.zip
│   ├── gt_gnss.csv
│   ├── gnss_antenna_base
│   │   ├── gnss_base.obs
│   │   └── gnss_base.nav
│   ├── gnss_antenna_right
│   │   └── …
│   └── gnss_antenna_left
│       └── …
…

.
├── navigation_evaluation.zip
│   ├── orbslam2_nav.csv
│   ├── orbslam2_nav_aligned.csv
│   ├── vins_mono_nav.csv
│   └── vins_mono_nav_aligned.csv
│
├── imu_data.csv
│
├── metadata.txt
│
├── img_rect_left.zip
│   ├── img_rect_left_*.png
│   └── …
├── img_rect_right.zip
│   ├── img_rect_right_*.png
│   └── …
├── img_rect_color.zip
│   ├── img_rect_color_*.png
│   └── …
├── img_rect_depth.zip
│   ├── img_rect_depth_*.png
│   └── …
├── img_omni_up.zip
│   ├── img_omni_up_*.png
│   └── …
└── img_omni_down.zip
    ├── img_omni_down_*.png
    └── …
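Before processing a downloaded run, it can help to confirm that all expected files are present. The following is a minimal sketch, assuming one run has been downloaded into a single local directory with the top-level file names listed in the tree. The helper names `missing_entries` and `list_images` are hypothetical and not part of the data set. Whatever replaces `*` in the image file names is treated as an opaque string.

```python
import re
import zipfile
from pathlib import Path

# Top-level entries of one run, copied from the directory tree of the data set.
EXPECTED_ENTRIES = [
    "calibration.zip", "ground_truth.zip", "navigation_evaluation.zip",
    "imu_data.csv", "metadata.txt",
    "img_rect_left.zip", "img_rect_right.zip", "img_rect_color.zip",
    "img_rect_depth.zip", "img_omni_up.zip", "img_omni_down.zip",
]


def missing_entries(run_dir):
    """Return the expected top-level entries that are absent from run_dir."""
    run_dir = Path(run_dir)
    return [name for name in EXPECTED_ENTRIES if not (run_dir / name).exists()]


def list_images(archive_path, prefix):
    """List the PNG members of an image archive whose file name starts with prefix.

    Assumption: members follow the img_<stream>_*.png pattern from the tree.
    The members are only sorted by name; no timestamp format is assumed.
    """
    pattern = re.compile(re.escape(prefix) + r".*\.png$")
    with zipfile.ZipFile(archive_path) as archive:
        names = [n for n in archive.namelist() if pattern.match(Path(n).name)]
    return sorted(names)
```

For example, `missing_entries("A0")` would return an empty list for a complete download of a run stored in a local folder named `A0` (the folder name is an assumption).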

Rosbags

All data are in universally usable formats, such as plain text files or .png images.

We know that rosbags are especially valuable to the community. We have already uploaded selected rosbags to our server. However, to save space, we will upload additional rosbags only on request.

Calibration

The calibration was done with:

DLR CalDe and DLR CalLab – The open-source DLR Camera Calibration Toolbox

The calibration files contain images of the DLR calibration pattern seen by all cameras. The calibration images are found on the download page, together with the calibration results.

Provided files:

- raw images of the calibration pattern

- calibration results ("callab_camera_calibration_*.txt")

- calibration information on the rectified images ("camera_rect_*.txt")

For navigation algorithms that use the rectified images, use the camera_rect_*.txt files!

Go to the download page to access the calibration data.

Benchmark

We use the SLAM navigation algorithms VINS-Mono and ORB-SLAM2 for navigation with MADMAX. You are invited to compare your navigation solution against these two algorithms.

We modified the image resolution and the message queue for ORB-SLAM2. See our HOW-TO page for details.

The code: VINS-Mono on GitHub

The paper: VINS-Mono: A Robust and Versatile Monocular Visual-Inertial State Estimator

The code: ORB-SLAM2 on GitHub

The paper: ORB-SLAM2: An Open-Source SLAM System for Monocular, Stereo, and RGB-D Cameras

The resulting navigation data can be downloaded in the download section. The trajectories of VINS-Mono and ORB-SLAM2 are shown for each individual experiment under "Location Details".

The performance of the algorithms is shown here:

Percentage of trajectory completed. Only results with 75%+ completion rate are considered for subsequent analysis.

Navigation Performance: RPE of the navigation algorithms for each experiment.

Navigation Performance: ATE of the navigation algorithms for each experiment.

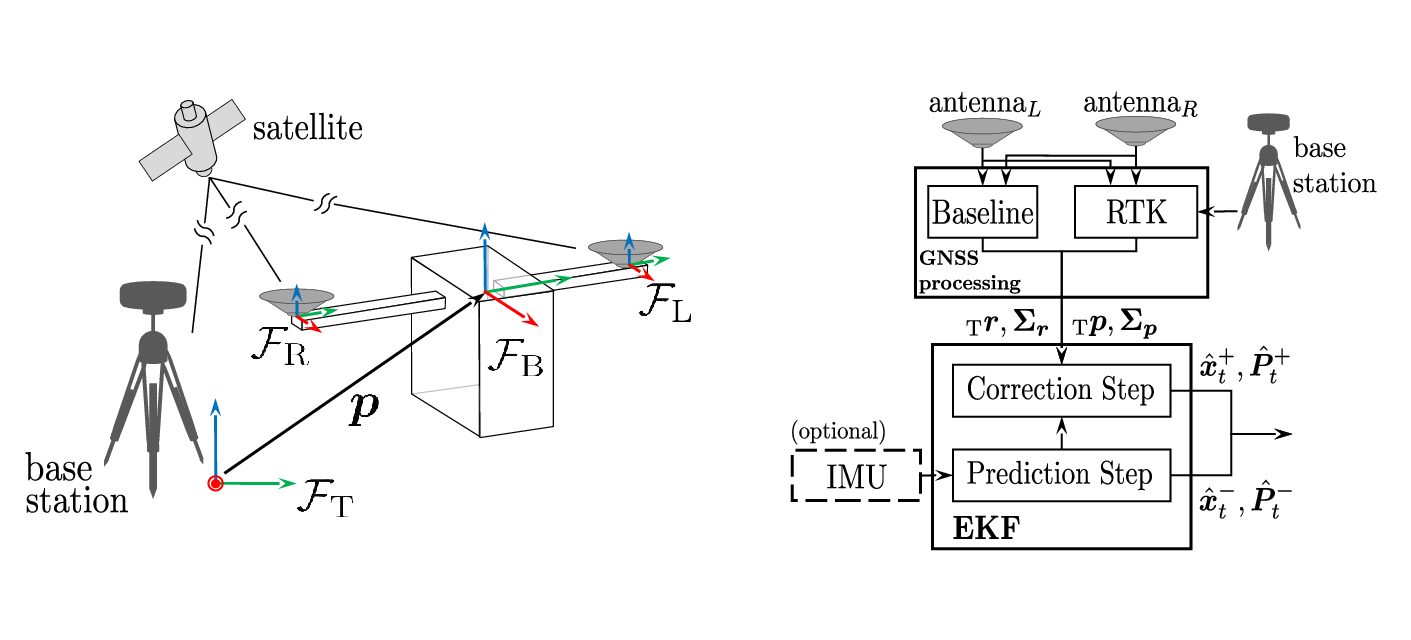
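To compare your own navigation solution against these results, the two error metrics can be sketched as follows. This is a minimal illustration, not the evaluation code used for the publication. It assumes that the estimated and ground-truth positions have already been associated by timestamp and loaded as N×3 NumPy arrays (no CSV column layout is assumed). The function names are hypothetical. The ATE is computed after a rigid least-squares alignment, and the RPE shown here is a simplified, position-only variant that compares displacement vectors over a fixed index step.

```python
import numpy as np


def align_rigid(est, gt):
    """Least-squares rotation R and translation t so that R @ est_i + t ~ gt_i."""
    mu_e, mu_g = est.mean(axis=0), gt.mean(axis=0)
    H = (est - mu_e).T @ (gt - mu_g)           # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T    # guard against reflections
    t = mu_g - R @ mu_e
    return R, t


def ate_rmse(est, gt):
    """Absolute trajectory error: RMSE of position residuals after alignment."""
    R, t = align_rigid(est, gt)
    aligned = est @ R.T + t
    return float(np.sqrt(np.mean(np.sum((aligned - gt) ** 2, axis=1))))


def rpe_translation_rmse(est, gt, delta=1):
    """Simplified position-only relative error over a step of `delta` samples."""
    R, t = align_rigid(est, gt)
    aligned = est @ R.T + t
    d_est = aligned[delta:] - aligned[:-delta]  # estimated displacements
    d_gt = gt[delta:] - gt[:-delta]             # ground-truth displacements
    return float(np.sqrt(np.mean(np.sum((d_est - d_gt) ** 2, axis=1))))
```

A full RPE implementation would use complete poses (position and orientation) rather than positions only, so treat the second function as a rough drift indicator.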

GNSS Ground Truth:

We computed the ground truth using our own algorithms. However, you can use the provided data of the left, right, and base antenna to compute your own ground truth. The provided raw data can be processed using RTKLIB:

RTKLIB: An Open Source Program Package for GNSS Positioning

Our GNSS setup is shown in the figure below (left). We use two antennas with a baseline of 1.285m to calculate the combined ground truth position and orientation of the Body frame B of SUPER, relative to the Topocentric frame T.

Our algorithmic approach to obtain the ground truth is shown below (right). Everything is described in detail in our paper.
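The idea of deriving a 5 DoF pose from two antenna positions can be illustrated with a short sketch. It is not the algorithm from the paper. It assumes that both antenna positions are already available in a local East-North-Up frame, that the antennas are mounted on the left and right of the body, and that the body x-axis points forward with z up. Under these assumptions the baseline yields the yaw and roll angles, while the rotation about the baseline itself stays unobservable. The function name `pose_5dof` is hypothetical.

```python
import math

NOMINAL_BASELINE_M = 1.285  # antenna baseline stated in the text


def pose_5dof(p_left, p_right):
    """Return (midpoint, yaw, roll, baseline_length) from two ENU positions.

    Assumptions (not taken from the paper): positions are (east, north, up)
    in metres, yaw is measured counterclockwise from East, and positive roll
    means the right antenna is lower than the left one.
    """
    be, bn, bu = (r - l for l, r in zip(p_left, p_right))  # left -> right
    midpoint = tuple((l + r) / 2.0 for l, r in zip(p_left, p_right))
    # Forward direction = horizontal baseline rotated by +90 degrees about Up.
    yaw = math.atan2(be, -bn)
    roll = math.atan2(-bu, math.hypot(be, bn))
    length = math.sqrt(be * be + bn * bn + bu * bu)
    return midpoint, yaw, roll, length
```

Comparing the returned baseline length with `NOMINAL_BASELINE_M` gives a simple plausibility check for each pair of antenna fixes.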

Known Issues

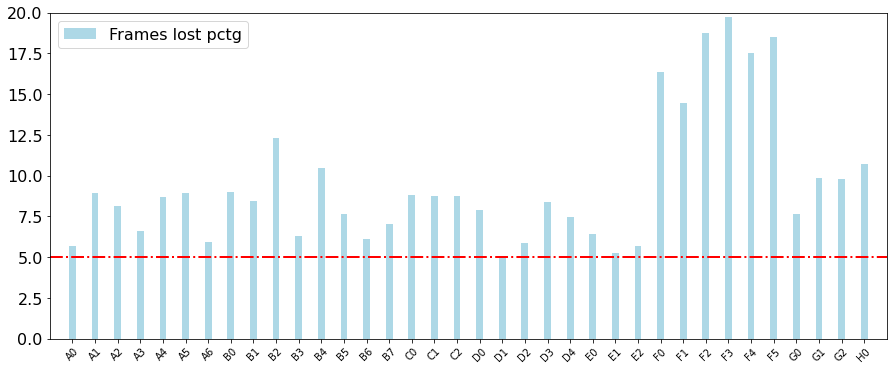

Frame Drops

As discussed in the publication, errors with the Ethernet connection of the cameras caused drops of frames.

How much each experiment was affected is shown below.

Interestingly, our evaluation with ORB-SLAM2 and VINS-Mono did not establish any correlation between frame drops and navigation accuracy or robustness.

Percentage of frame drops compared to overall number of frames.
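A frame-drop percentage of this kind can be estimated from image timestamps alone. The sketch below is one possible way to do so and not necessarily how the published numbers were computed. It assumes timestamps in seconds and a constant nominal frame rate (4 Hz for the navigation cameras, as listed in the sensor table). The function name is hypothetical.

```python
def frame_drop_percentage(timestamps, rate_hz=4.0):
    """Estimate the share of dropped frames, in percent of expected frames.

    Assumption: the first and last timestamps mark the recording span, so
    drops before the first or after the last received frame are not counted.
    """
    if len(timestamps) < 2:
        return 0.0
    ts = sorted(timestamps)
    expected = round((ts[-1] - ts[0]) * rate_hz) + 1  # frames at nominal rate
    dropped = max(expected - len(ts), 0)
    return 100.0 * dropped / expected
```

With nine expected frames over two seconds and seven received frames, the function reports two dropped frames, i.e. about 22 %.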

Camera decalibration with the G-runs

During the G runs, the stereo camera extrinsics were decalibrated by a mechanical impact. As a result, the depth images are slightly inaccurate. SLAM-based algorithms can usually handle this and still provide correct navigation results.

Statistics

Length and character of trajectories

| Location | Character | ID | Length [m] | Type | |

|---|---|---|---|---|---|

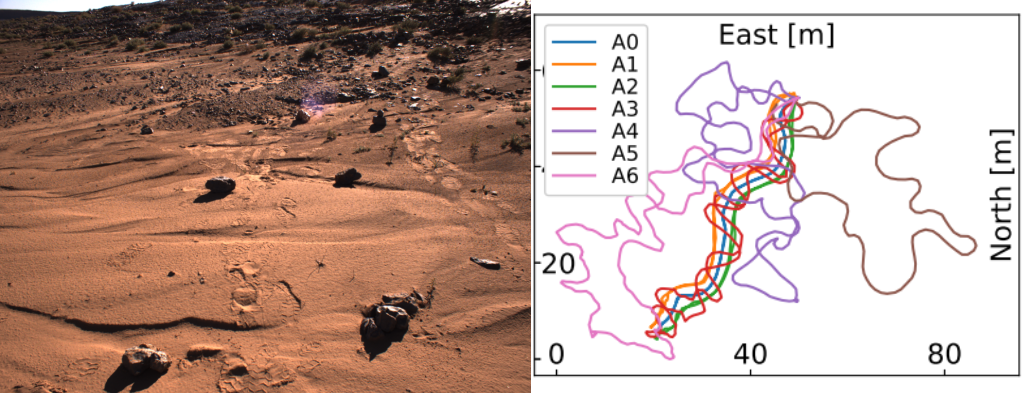

| A | Flat area with stones, rock ridge at the end of the area. Low sun illumination. | A0 | 133 | homing | |

| A1 | 138 | homing | |||

| A2 | 134 | homing | |||

| A3 | 219 | zig‐zag + homing | |||

| A4 | 250 | mapping | |||

| A5 | 200 | mapping | |||

| A6 | 258 | exploration | |||

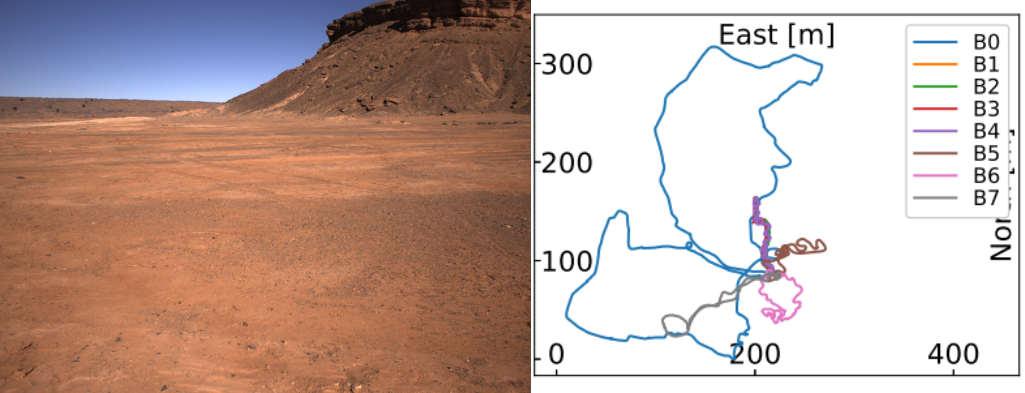

| B | Flat area with sand and pebbles and few big rocks, cliffs visible in the background. | B0 | 1511 | long range nav | |

| B1 | 193 | homing | |||

| B2 | 195 | homing | |||

| B3 | 195 | homing | |||

| B4 | 286 | zig‐zag + homing | |||

| B5 | 301 | mapping | |||

| B6 | 293 | exploration | |||

| B7 | 312 | exploration | |||

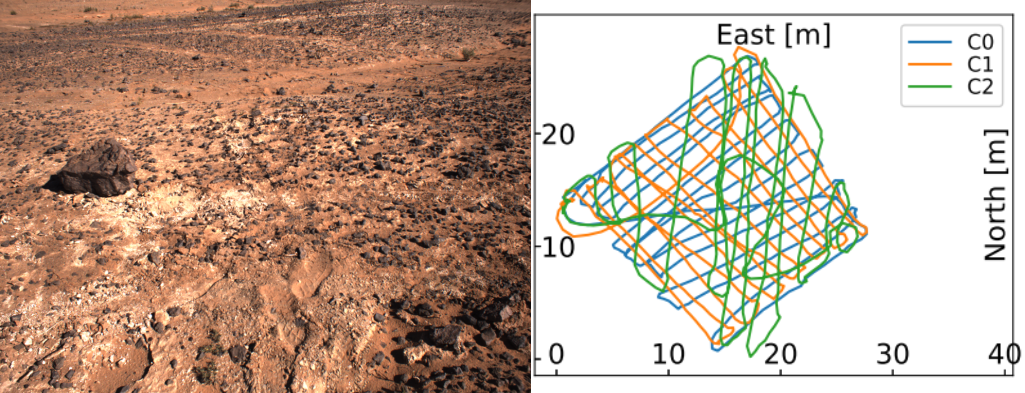

| C | Small flat and square area, half sandy and half stony. | C0 | 341 | zig‐zag | |

| C1 | 321 | zig‐zag | |||

| C2 | 378 | zig‐zag | |||

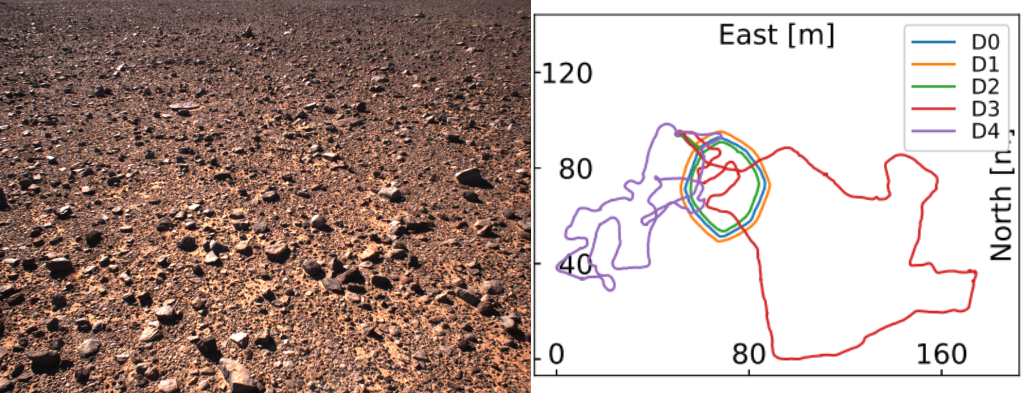

| D | Flat area with small stones and pebbles, hill formation in the background | D0 | 141 | circular homing | |

| D1 | 155 | circular homing | |||

| D2 | 134 | circular homing | |||

| D3 | 493 | long range nav | |||

| D4 | 422 | exploration run | |||

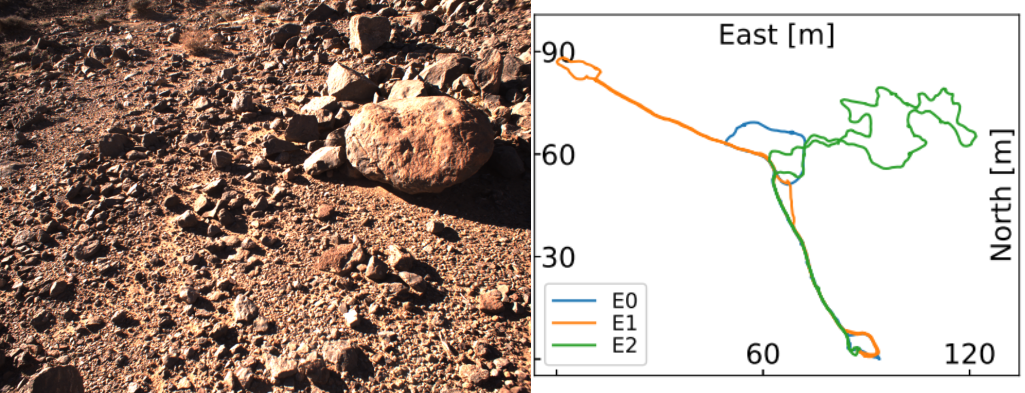

| E | Rough terrain inside a valley and big stones within the traversed path. | E0 | 223 | exploration | |

| E1 | 309 | exploration | |||

| E2 | 374 | exploration | |||

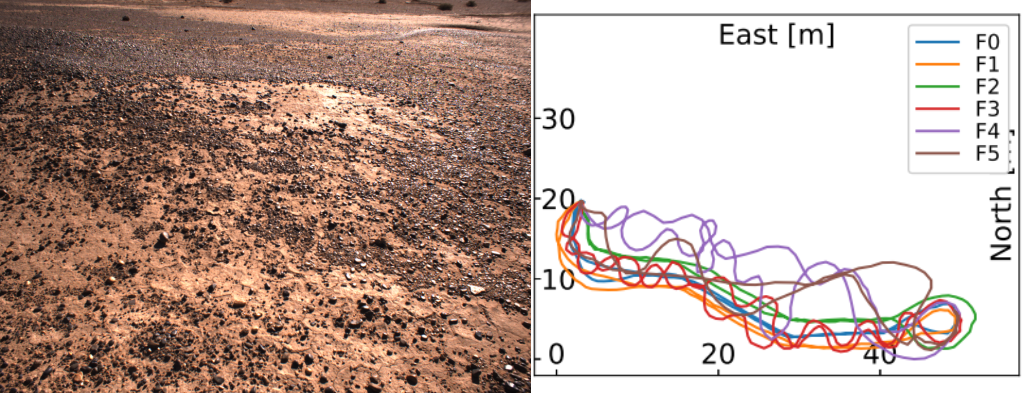

| F | Flat area with small pebbles, rough terrain at the end of the area. | F0 | 121 | homing | |

| F1 | 128 | homing | |||

| F2 | 121 | homing | |||

| F3 | 172 | zig‐zag + homing | |||

| F4 | 167 | mapping | |||

| F5 | 141 | mapping | |||

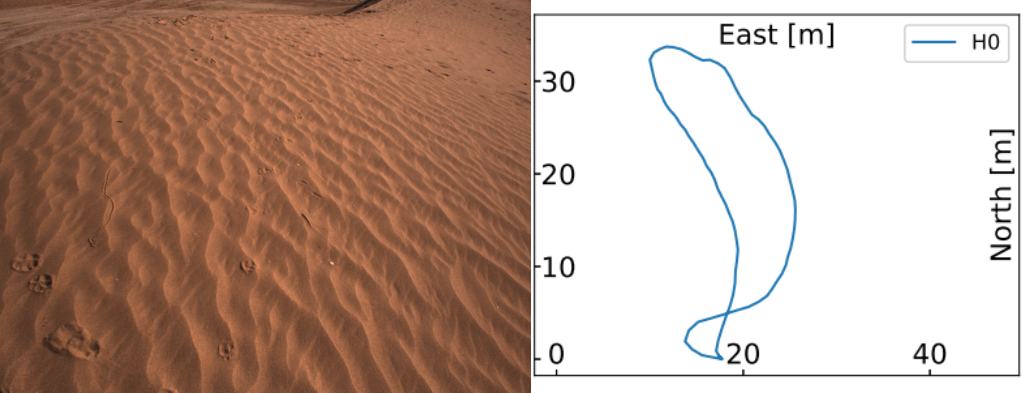

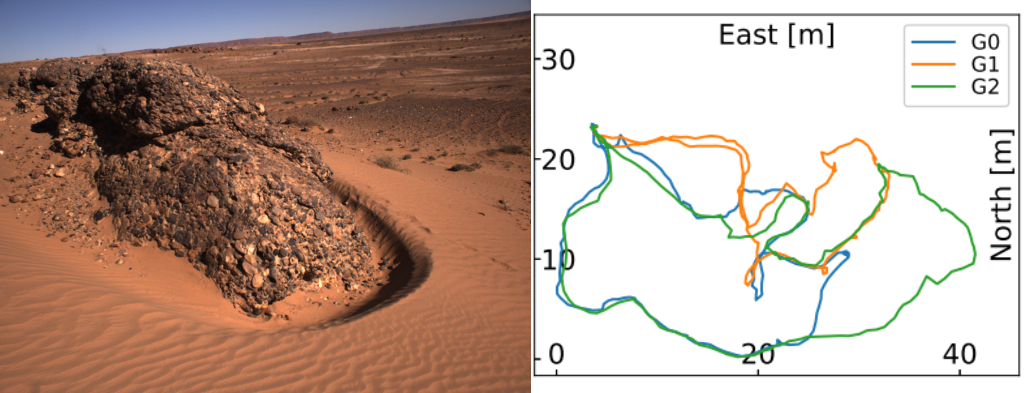

| G | Navigation around big composite rock boulders with sandy surface in between. | G0* | 125 | exploration | |

| G1* | 115 | exploration | |||

| G2* | 154 | exploration | |||

| H | Desert sand dunes. | H0 | 90 | exploration |

* marks trajectories, where a decalibration of the stereo camera extrinsics occurred.
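The summary figures given in the introduction (36 experiments, a longest trajectory of about 1.5 km, and a combined length of about 9.2 km) can be cross-checked against the table. The short script below simply tallies the lengths copied from the table; the dictionary name is chosen for illustration.

```python
# Trajectory lengths in metres, copied from the table, keyed by location.
LENGTHS_M = {
    "A": [133, 138, 134, 219, 250, 200, 258],
    "B": [1511, 193, 195, 195, 286, 301, 293, 312],
    "C": [341, 321, 378],
    "D": [141, 155, 134, 493, 422],
    "E": [223, 309, 374],
    "F": [121, 128, 121, 172, 167, 141],
    "G": [125, 115, 154],
    "H": [90],
}

num_runs = sum(len(runs) for runs in LENGTHS_M.values())
total_km = sum(sum(runs) for runs in LENGTHS_M.values()) / 1000.0
longest_m = max(max(runs) for runs in LENGTHS_M.values())

print(num_runs)   # 36
print(total_km)   # 9.243
print(longest_m)  # 1511
```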

Handheld field testing is representative for Rover navigation

As described in our paper (section 6.3), we conclude that our handheld data set is representative of planetary rover navigation, because the navigation algorithms achieve an accuracy comparable to that on similar rover-based navigation data sets.

Credits:

This work was funded by the DLR project MOdulares Robotisches EXplorationssystem (MOREX).

This activity has been conducted jointly with the two European Commission Horizon 2020 Projects InFuse and Facilitators. They received funding from the European Union’s Horizon 2020 research and innovation program under grant agreement No 730068 and 730014.

Publications:

As mentioned above, if you use the data from the data set in your own work, please cite us in the following way:

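Meyer, L., Smíšek, M., Fontan Villacampa, A., Oliva Maza, L., Medina, D., Schuster, M. J., Steidle, F., Vayugundla, M., Müller, M. G., Rebele, B., Wedler, A., & Triebel, R. (2021). The MADMAX data set for visual-inertial rover navigation on Mars. Journal of Field Robotics, 1–21. https://doi.org/10.1002/rob.22016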

List of relevant publications:

- Detailed system description of the Lightweight Rover Unit LRU (the technical reference of SUPER):

  Schuster, Martin J. et al. (2017) Towards Autonomous Planetary Exploration: The Lightweight Rover Unit (LRU), its Success in the SpaceBotCamp Challenge, and Beyond. Journal of Intelligent & Robotic Systems. Springer. doi: 10.1007/s10846-017-0680-9.

  Free Access: https://elib.dlr.de/116749/

- Etna Long Range Navigation Test – Moon-analogue navigation data set with identical sensor setup as SUPER:

  Vayugundla, Mallikarjuna et al. (2018) Datasets of Long Range Navigation Experiments in a Moon Analogue Environment on Mount Etna. In: 50th International Symposium on Robotics, pp. 1-7. ISR 2018; 50th International Symposium on Robotics, 20-21 June 2018, Munich, Germany.

  Free Access: https://elib.dlr.de/124514/

- Other publications related to the overall Morocco Field Test campaign:

  - Post, Mark et al. (2018) InFuse Data Fusion Methodology for Space Robotics, Awareness and Machine Learning. In: 69th International Astronautical Congress, Oct 2018, Bremen, Germany. https://hal.laas.fr/hal-02092238

  - Lacroix, Simon et al. (2019) The Erfoud dataset: A comprehensive multi-camera and Lidar data collection for planetary exploration. Proceedings of the Symposium on Advanced Space Technologies in Robotics and Automation. https://www.laas.fr/projects/erfoud-dataset/

Related Work

Etna Long Range Navigation Test – Moon-analogue navigation data set with identical sensor setup as SUPER

Data Set Website: https://datasets.arches-projekt.de/etna2017/

Publication: https://elib.dlr.de/124514/

Access

To gain access to the full list of data sets, please fill in the registration form. You will receive an email with the details.